About Anthropic: Claude, safety research and recent funding

Introduction: Why Anthropic matters

Anthropic is an AI research organisation that has become a focal point in discussions about generative AI, safety and commercial deployment. Its stated mission to build “reliable, interpretable, and steerable” systems, together with its flagship assistant Claude, places the company at the intersection of technological progress and public policy. Developments at Anthropic matter to readers because they influence how AI products are built, regulated and adopted across industry.

Main body: Company profile, products and recent events

Purpose and public materials

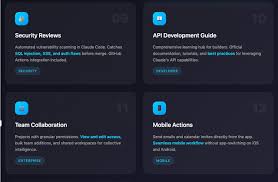

Anthropic publicly emphasises safety-first research. Company pages highlight initiatives such as a constitution for Claude and an Anthropic Academy, intended to support learning with Claude and to frame discussion of the societal impacts of AI. The organisation presents its research as covering natural language, human feedback, scaling laws, reinforcement learning, code generation and interpretability.

Products and model family

Anthropic’s principal commercial offering is Claude, an AI assistant released in multiple iterations. Public sources reference several model generations and releases, including Claude 4, with models grouped under the names Opus, Sonnet and Haiku and versions such as Opus 4.1, Sonnet 4.5 and Opus 4.5. These successive releases indicate a steady development cadence aimed at expanding capability and application scope.

Workforce, funding and partnerships

Reported figures for Anthropic’s size vary by source. LinkedIn lists a company size band of 501–1,000 employees and shows 4,097 associated members, while other reporting cites roughly 2,300 employees as of 2025. Financial data shared on business platforms lists a Series F round dated October 2, 2025, with a reported raise of US$13.0 billion from investors including ICONIQ Capital and Lightspeed Venture Partners. The company has also announced a deepened collaboration with Amazon to advance its generative AI efforts.

Controversies and scrutiny

Anthropic has faced scrutiny in media reports, including claims that its technology was implicated in automated cyber activity attributed to foreign actors. Such reporting feeds the broader debate about governance, misuse risk and the need for robust safety and compliance measures.

Conclusion: Implications and what to watch

Anthropic’s combination of safety-focused research, rapid model releases and large-scale funding positions it as a consequential actor in AI. Readers should watch forthcoming product updates, partnerships with major cloud and enterprise players, and regulatory scrutiny, all of which will shape how generative AI tools are used and governed in the near term.